Imagine spending months perfecting the content and design of your new online store, filled with carefully selected products that you know your customers will love. The launch day arrives, and you excitedly publish your latest blog post, announcing the grand opening filled with introductory offers and detailed insights into your unique products. However, days pass, and you notice that despite your efforts, the traffic is not picking up, and your post isn’t appearing in Google search results as expected.

Puzzled and concerned, you turn to Google Search Console, only to discover a slew of crawl errors preventing search engines from properly indexing your site. This discovery hits like a cold shower: your content, as well-prepared as it is, remains unseen by your potential audience due to these digital roadblocks. This situation, frustrating yet incredibly common among website owners, underscores the critical need for regular SEO maintenance. Just as you wouldn’t open a physical store with blocked access paths, ensuring clear pathways for search engines is essential for any online presence. Learning how to fix common crawl errors not only boosts your site’s visibility but also enhances your engagement with potential customers, directly impacting your business’s success online.

Proper website maintenance and troubleshooting crawl errors are crucial for ensuring that search engines can seamlessly navigate and index your website. By taking proactive steps to address these issues, you can significantly improve your site’s search engine visibility and performance, drawing more visitors and ultimately enhancing your online business success.

Understanding Common Crawl Errors

To effectively address crawl errors, it’s important to understand the most common types that can affect your website:

1. DNS Errors

DNS errors occur when search engines can’t resolve your website’s domain name to an IP address. It’s akin to a GPS system failing to recognize a road that definitely exists. This could be due to issues with your DNS provider or incorrect DNS record settings. If not resolved, it can keep both crawlers and visitors from reaching your site at all, much like customers who can’t find your store because the road signs are wrong.

2. Server Errors

Server errors happen when your server fails to correctly respond to a search engine’s request to access a page. This could be due to server overloads, crashes, or configuration errors. It’s similar to having your store’s door jammed shut on the day of a big event; guests arrive but can’t enter.

3. Robots.txt Fetch Errors

These occur when a search engine can’t access your robots.txt file, a crucial component that directs search engines on which parts of your site should and shouldn’t be crawled. Improper configuration here is like misplacing a ‘Welcome’ or ‘Do Not Enter’ sign, leading to confusion about which areas are open to visitors.

How to Diagnose Crawl Errors

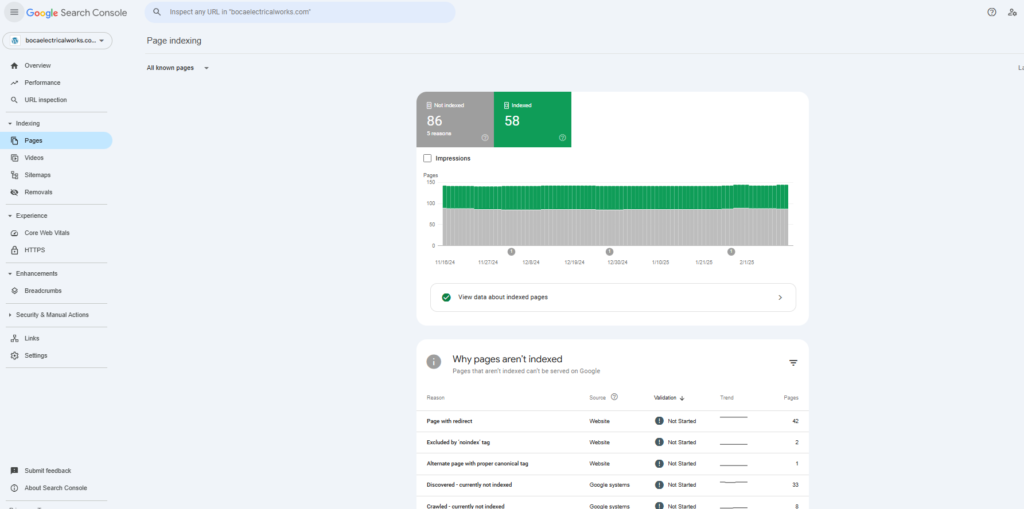

Effectively diagnosing crawl errors is the first step towards fixing them. Google Search Console is an indispensable tool for this purpose, providing a comprehensive platform for webmasters to monitor how their site interacts with Google Search:

1. Access Google Search Console

- Log In: Visit the Google Search Console website and log in with your account details.

- Select Your Property: Choose the correct website property from the list if you manage more than one.

2. Check for Indexing Issues

- Navigate to ‘Pages’: Under the ‘Indexing’ section in the sidebar, click on ‘Pages’. This area provides details about the pages Google has tried to index and any issues encountered.

- Review the Status: In the ‘Pages’ section, you can see which pages are successfully indexed and which ones have issues that might be preventing them from appearing in search results.

3. Utilize the URL Inspection Tool

- Find the URL Inspection Tool: Located at the top of the sidebar, this tool allows you to input a specific URL to see how Google views that page.

- Inspect Specific URLs: Enter the URL of a page you’re concerned about. The tool will provide details on whether the page is indexed, any crawl errors, and the last crawl date.

- Request Indexing: If a page is fixed or you want to prompt Google to re-evaluate a page, you can request re-indexing directly from this tool.

4. Investigate Coverage Issues

- Find the former ‘Coverage’ report: Older accounts and guides refer to a ‘Coverage’ report. If your sidebar does not list it, the ‘Pages’ report under ‘Indexing’ provides the same kind of information. Look for any notifications or error messages that indicate problems with page indexing.

5. Document and Address Issues

- Record the Findings: Keep detailed notes of any errors or warnings associated with specific URLs.

- Develop an Action Plan: Based on the errors identified, outline corrective steps, such as modifying your site’s robots.txt file, fixing broken links, or resolving server errors.

6. Monitor Performance Regularly

- Return to the Overview: Regularly check the ‘Overview’ section on the main dashboard to monitor your site’s general health and performance in Google Search. This can give you a high-level view of trends that might indicate broader issues.

By following these steps, you can leverage Google Search Console effectively to identify and resolve crawl errors, thereby improving your website’s visibility and performance in Google search results. Each section in GSC provides critical insights that can help you understand how your site is performing and what steps you need to take to optimize its presence in search results.

Ensuring that search engines can smoothly navigate and index your website involves a structured approach to addressing common crawl errors. Here’s a detailed, step-by-step guide to help you resolve these issues:

Fixing DNS Errors

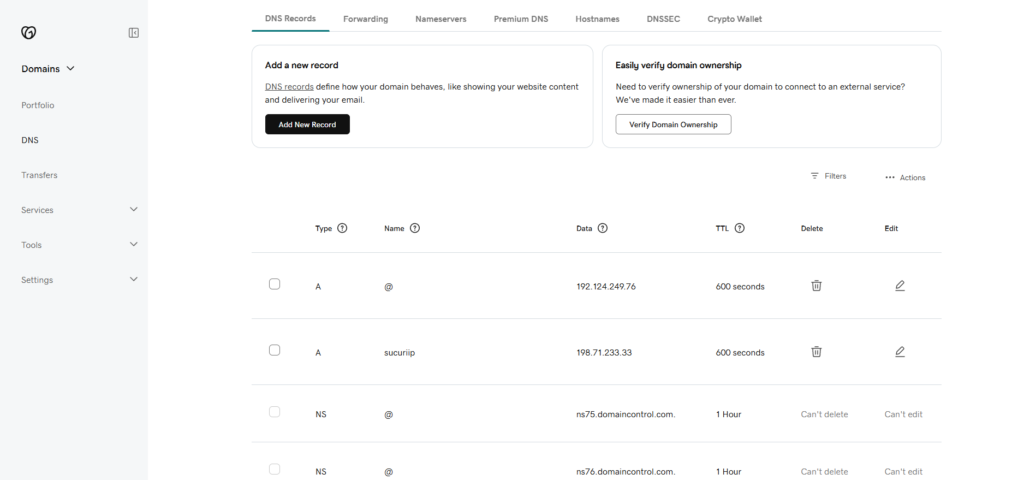

DNS issues can prevent search engines from finding your site. Here’s how to ensure your DNS settings are correct:

Verify DNS Configuration

- Access your domain registrar’s control panel: Log in to the website where you registered your domain.

- Check DNS records: Navigate to the DNS settings or Domain Management area. You should see records such as A, AAAA, and CNAME.

- Confirm record settings: Ensure that the A record points to your server’s correct IP address. For domains using subdomains, check that CNAME records point to the appropriate domain name (e.g., the www.example.com CNAME should point to example.com).

Switch to a Reliable DNS Provider

- Research providers: Look for DNS hosting that offers high uptime, fast resolution, and strong support. Providers like Cloudflare, Google Cloud DNS, and AWS Route 53 are popular options.

- Change DNS settings: In your domain registrar’s panel, update the DNS server settings to the new provider’s nameservers. This change can take anywhere from a few minutes to 48 hours to propagate.
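To confirm that a record change has taken effect, you can check what your own machine resolves the domain to. The following is a minimal sketch using only Python's standard library; the hostname and the expected IP address in the comments are placeholders, and the result reflects your local resolver, which may differ from what Google's crawlers see while a change is still propagating.

```python
import socket


def resolve_ips(hostname):
    """Return the sorted, de-duplicated IP addresses the local resolver reports."""
    try:
        infos = socket.getaddrinfo(hostname, None)
    except socket.gaierror:
        # Resolution failed: this is the situation a crawler reports as a DNS error.
        return []
    # Each entry's last field is the socket address; its first item is the IP.
    return sorted({info[4][0] for info in infos})


def a_record_matches(resolved_ips, expected_ip):
    """True when the server IP you expect is among the resolved addresses."""
    return expected_ip in resolved_ips


# Example usage (placeholder values, requires network access):
#   ips = resolve_ips("www.example.com")
#   print(ips, a_record_matches(ips, "203.0.113.10"))
```

An empty list means the name did not resolve at all, while a non-empty list without your server's address usually points to a stale or incorrect record.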

Resolving Server Errors

Server errors can drastically affect your website’s availability and performance. Here’s how to optimize your server:

Optimize Server Performance

- Evaluate your current hosting plan: Check if your current server resources (CPU, RAM, bandwidth) meet your website’s demands, especially during peak traffic.

- Upgrade resources or hosting plan: If your assessment shows that your server is consistently at or near capacity, upgrade your hosting plan or add resources.

- Implement performance enhancements: Use techniques like caching, content delivery networks (CDN), and database optimization to improve server response times.

Regular Maintenance

- Schedule server updates: Regularly update your server’s software (OS, CMS, databases) to the latest versions to fix bugs and improve performance.

- Monitor server health: Use tools like Nagios, Zabbix, or New Relic to monitor server performance and get alerts for downtime, high load, or other potential issues.

- Conduct regular audits: Periodically review your server configuration and performance logs to identify and rectify potential issues that could lead to server errors.
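Alongside a full monitoring tool, a small script can spot-check whether key pages return server errors. This is a rough sketch using Python's standard library, not a replacement for the tools named above; the user agent string and the URL in the comments are made up for illustration.

```python
import urllib.error
import urllib.request


def classify_status(code):
    """Map an HTTP status code to a coarse category."""
    if 200 <= code < 300:
        return "ok"
    if 300 <= code < 400:
        return "redirect"
    if 400 <= code < 500:
        return "client_error"
    if 500 <= code < 600:
        return "server_error"
    return "unknown"


def fetch_status(url, timeout=10):
    """Return the final HTTP status for url, or None if no response arrived."""
    request = urllib.request.Request(
        url,
        method="HEAD",
        headers={"User-Agent": "crawl-check-sketch/0.1"},  # hypothetical name
    )
    try:
        # urlopen follows redirects, so this is the status of the final page.
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # 4xx and 5xx responses are raised as HTTPError
    except OSError:
        return None  # DNS failure, refused connection, or timeout


# Example usage (placeholder URL, requires network access):
#   status = fetch_status("https://www.example.com/")
#   print(status, classify_status(status) if status is not None else "no response")
```

Some servers handle HEAD requests differently from GET, so treat an unexpected result as a prompt to look closer rather than as proof of a problem.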

Correcting Robots.txt Errors

The robots.txt file tells search engines which parts of your site they should and should not crawl. Here’s how to ensure it’s set up correctly:

Audit Your Robots.txt File

- Locate your robots.txt file: This file should be in the root directory of your website (e.g., https://www.yoursite.com/robots.txt).

- Review the file: Ensure that it correctly allows or disallows access to parts of your website. For example, you should disallow access to admin areas while allowing access to public directories.

Test Your Robots.txt File

- Open the robots.txt report in Google Search Console: The legacy ‘Crawl’ > ‘robots.txt Tester’ is no longer available in current versions of Search Console. Log into your account and, under ‘Settings’, open the robots.txt report instead.

- Review the report: It lists the robots.txt files Google has found for your site, when each was last fetched, and any errors or warnings Google ran into while fetching or parsing them.

- Make necessary adjustments: Based on the reported errors or warnings, correct the file, request a recrawl from the report, and recheck until no errors remain.
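You can also sanity-check your rules locally before publishing them. Here is a minimal sketch using Python's built-in `urllib.robotparser`; the sample rules and URLs are invented for illustration. One caveat: this parser applies the first matching rule, whereas Google picks the most specific match, so results can differ for files that mix overlapping Allow and Disallow lines.

```python
from urllib.robotparser import RobotFileParser

# Illustrative rules only: block the admin area, allow everything else.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Allow: /
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given rules forbid user_agent from fetching."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


urls_to_check = [
    "https://www.example.com/admin/login",
    "https://www.example.com/products/widget",
]
for url in blocked_urls(SAMPLE_ROBOTS_TXT, urls_to_check):
    print("Blocked for Googlebot:", url)
```

Running the same check against a list of pages you expect to rank is a quick way to catch a rule that blocks more than you intended.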

By following these detailed steps, you can resolve common crawl errors effectively, ensuring that search engines can access and index your site without issues. This proactive approach not only enhances your site’s SEO performance but also improves user experience by ensuring your site’s content is accessible and performs well.

Tips for Ongoing Maintenance of Your Website

Maintaining the health of your website is crucial for ensuring it continues to perform well in search results and provides a good user experience. Here are some elaborated tips on how to keep your website in top shape by preventing and managing crawl errors:

Regular Monitoring

Consistent monitoring of your website helps you detect and fix issues before they significantly affect your site’s performance. Here’s how you can do it:

Set Up Alerts in Google Search Console

- Google Search Console provides a variety of alerts for different issues including crawl errors, security issues, and indexing problems. Make sure you have email notifications enabled so you get alerts directly in your inbox.

- Regularly log into Google Search Console to check the ‘Pages’ report (called ‘Coverage’ in older versions) and the ‘Performance’ report, where you can see issues that might not trigger an alert but are worth addressing.

Monitor Your Site’s Uptime

- Use tools like Uptime Robot, Pingdom, or New Relic to monitor your website’s availability. These tools can alert you if your site goes down or if there are significant fluctuations in response time, which could indicate server issues.

Analyze Logs for Errors

- Regularly check your server logs for unusual activities or errors such as frequent 404 errors, 500 internal server errors, or security breaches. Identifying these issues early can prevent them from escalating.
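As a starting point for log analysis, the sketch below counts error responses per URL path. It assumes your server writes the widely used common or combined access log format; the pattern and the 400 threshold are illustrative choices, so adjust them if your log layout differs.

```python
import re
from collections import Counter

# Matches the quoted request line and the status code that follows it, e.g.
# "GET /old-page HTTP/1.1" 404 512
LOG_PATTERN = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) '
)


def count_error_paths(log_lines, min_status=400):
    """Count (status, path) pairs for responses at or above min_status."""
    counts = Counter()
    for line in log_lines:
        match = LOG_PATTERN.search(line)
        if match is None:
            continue  # skip lines that are not in the expected format
        status = int(match.group("status"))
        if status >= min_status:
            counts[(status, match.group("path"))] += 1
    return counts


# Example usage (the log path is a placeholder):
#   with open("/var/log/nginx/access.log") as handle:
#       for (status, path), hits in count_error_paths(handle).most_common(10):
#           print(status, path, hits)
```

Paths that repeatedly return 404 or 5xx responses are good candidates for a redirect or a server-side fix.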

Continual Updates and Testing

Keeping your website updated and testing major changes are critical steps in maintaining its health:

Regularly Update Content and Structure

- Update your sitemap whenever new pages are added or removed to ensure search engines can more efficiently crawl your website. This is particularly important for large websites or those that frequently add new content.

- Periodically review and update your robots.txt file to ensure that it still matches the parts of your site you want crawled. As you add new directories or features that search engines should not crawl, add them to the robots.txt file. Keep in mind that robots.txt controls crawling rather than indexing, so a page that must stay out of search results needs a noindex directive or access restriction instead.
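If your CMS does not generate a sitemap for you, regenerating one whenever pages change can be scripted. The following is a minimal sketch with Python's standard library that emits only the required `loc` entries of the sitemaps.org format; the URLs and output filename are placeholders, and it needs Python 3.8 or later for the `xml_declaration` argument.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a UTF-8 encoded XML sitemap listing the given page URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        url_element = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" in the URL for us.
        ET.SubElement(url_element, "loc").text = url
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


# Example usage (placeholder URLs and filename):
#   pages = ["https://www.example.com/", "https://www.example.com/blog/grand-opening"]
#   with open("sitemap.xml", "wb") as handle:
#       handle.write(build_sitemap(pages))
```

After publishing the file, you can submit its URL in the ‘Sitemaps’ section of Google Search Console.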

Test Changes Before Going Live

- Before implementing significant changes to your website, such as a redesign or new functionality, use a staging environment to test these changes. Check for crawl errors by using tools like Screaming Frog SEO Spider or Lumar (formerly DeepCrawl).

- Validate any new code, especially JavaScript, which can sometimes introduce SEO issues if not implemented correctly. Google has retired its standalone Mobile-Friendly Test, but the Rich Results Test and the live test in the URL Inspection tool can show how Google renders your page after the change.

Keep Software Up to Date

- Regularly update your website’s CMS, plugins, and any other software components. Updates often include security patches and fixes for bugs that could cause crawl errors or other issues.

Conduct Regular SEO Audits

- Schedule regular SEO audits to evaluate your website comprehensively. This includes checking for broken links, ensuring that all pages have SEO-friendly URLs, meta titles, and descriptions, and that they follow the latest SEO best practices.
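Part of such an audit can be automated. As one small example, the sketch below checks a page's HTML for a missing title or meta description using Python's built-in `html.parser`. The class and function names are made up for this illustration, and a real audit would cover far more than these two checks.

```python
from html.parser import HTMLParser


class HeadAudit(HTMLParser):
    """Collects the <title> text and the meta description from a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attr_map.get("name") or "").lower() == "description":
            self.description = attr_map.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_page(html):
    """Return a list of basic on-page problems found in the HTML."""
    parser = HeadAudit()
    parser.feed(html)
    problems = []
    if not parser.title.strip():
        problems.append("missing title")
    if not parser.description or not parser.description.strip():
        problems.append("missing meta description")
    return problems
```

Feeding each crawled page's HTML through `audit_page` gives you a quick list of pages to fix before a deeper manual review.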

Integrating these ongoing maintenance practices into your regular website management routine can minimize the risk of crawl errors and other issues that could impact your SEO and overall website performance. This proactive approach not only helps in maintaining your search engine rankings but also enhances the experience for your visitors.

Frequently Asked Questions

1. How do I know if crawl errors are affecting my site’s traffic?

You can check the impact of crawl errors on site traffic by comparing fluctuations in your traffic with the timing of detected crawl errors in Google Search Console. If errors coincide with traffic drops, they may be affecting your visibility.

2. Can crawl errors cause my website to be penalized by Google?

While crawl errors themselves do not directly result in penalties from Google, they can impair your site’s ability to be effectively indexed and ranked, indirectly affecting your SEO performance.

3. What specific steps should I take if I find a DNS error in Google Search Console?

If a DNS error is reported in Google Search Console, first verify that your DNS records are correct and active with your hosting provider. If the records are accurate, consider switching to a more reliable DNS provider.

4. How frequently should I update my robots.txt file?

Update your robots.txt file whenever you make significant changes to your website structure, such as adding new directories or changing the areas you want to be indexed or kept private. Review the file at least every six months to ensure it still reflects your current website architecture.

How Can Jelly Ann Help You?

Navigating SEO and technical website issues can be overwhelming, especially when you’re focused on growing your business. If crawl errors and other SEO challenges are holding you back, Jelly Ann Can Help You. With customized strategies and expert guidance, I can help optimize your site for search engines, clearing the way for improved visibility and traffic.

Contact me today to start solving these complex issues together!
